hadoop集群负载高导致的flume问题

flume->hdfs source是tail、channel是memory、sink是hdfs

flume端报错:

2015-01-29 01:55:58,424 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:601)] Caught IOException while closing file (/user/etl/flume/live_pt/20150129/01//live_pt_bx_14_96.1422466474360.tmp). Exception follows.

java.net.SocketTimeoutException: 70000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.16.14.96:57231 remote=/10.10.78.116:50011]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:1998)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.transfer(DFSOutputStream.java:1073)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:1040)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1184)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:933)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:487)

2015-01-29 01:55:59,129 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:463)] HDFS IO error

java.net.SocketTimeoutException: 70000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.16.14.96:57231 remote=/10.10.78.116:50011]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:1998)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.transfer(DFSOutputStream.java:1073)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:1040)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1184)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:933)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:487)

2015-01-29 01:56:05,153 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:95)] Unexpected error while checking replication factor

java.lang.reflect.InvocationTargetException

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.getNumCurrentReplicas(AbstractHDFSWriter.java:161)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:81)

at org.apache.flume.sink.hdfs.BucketWriter.shouldRotate(BucketWriter.java:623)

at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:558)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:426)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.SocketTimeoutException: 70000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.16.14.96:57231 remote=/10.10.78.116:50011]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:1998)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.transfer(DFSOutputStream.java:1073)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:1040)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1184)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:933) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:487)

报错持续了半个小时,然后抛出异常,进程退出

2015-01-29 01:58:18,025 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:467)] process failed

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOfRange(Arrays.java:2694)

at java.lang.String.<init>(String.java:203)

at java.lang.StringBuffer.toString(StringBuffer.java:561)

at java.io.StringWriter.toString(StringWriter.java:210)

at org.apache.log4j.DefaultThrowableRenderer.render(DefaultThrowableRenderer.java:64)

at org.apache.log4j.spi.ThrowableInformation.getThrowableStrRep(ThrowableInformation.java:87)

at org.apache.log4j.spi.LoggingEvent.getThrowableStrRep(LoggingEvent.java:413)

at org.apache.log4j.WriterAppender.subAppend(WriterAppender.java:313)

at org.apache.log4j.WriterAppender.append(WriterAppender.java:162)

at org.apache.log4j.AppenderSkeleton.doAppend(AppenderSkeleton.java:251)

at org.apache.log4j.helpers.AppenderAttachableImpl.appendLoopOnAppenders(AppenderAttachableImpl.java:66)

at org.apache.log4j.Category.callAppenders(Category.java:206)

at org.apache.log4j.Category.forcedLog(Category.java:391)

at org.apache.log4j.Category.log(Category.java:856)

at org.slf4j.impl.Log4jLoggerAdapter.error(Log4jLoggerAdapter.java:576)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:95)

at org.apache.flume.sink.hdfs.BucketWriter.shouldRotate(BucketWriter.java:623)

at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:558)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:426)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

at java.lang.Thread.run(Thread.java:745)

Exception in thread "SinkRunner-PollingRunner-DefaultSinkProcessor" java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOfRange(Arrays.java:2694)

at java.lang.String.<init>(String.java:203)

at java.lang.StringBuffer.toString(StringBuffer.java:561)

at java.io.StringWriter.toString(StringWriter.java:210)

at org.apache.log4j.DefaultThrowableRenderer.render(DefaultThrowableRenderer.java:64)

at org.apache.log4j.spi.ThrowableInformation.getThrowableStrRep(ThrowableInformation.java:87)

at org.apache.log4j.spi.LoggingEvent.getThrowableStrRep(LoggingEvent.java:413)

at org.apache.log4j.WriterAppender.subAppend(WriterAppender.java:313)

at org.apache.log4j.WriterAppender.append(WriterAppender.java:162)

at org.apache.log4j.AppenderSkeleton.doAppend(AppenderSkeleton.java:251)

at org.apache.log4j.helpers.AppenderAttachableImpl.appendLoopOnAppenders(AppenderAttachableImpl.java:66)

at org.apache.log4j.Category.callAppenders(Category.java:206)

at org.apache.log4j.Category.forcedLog(Category.java:391)

at org.apache.log4j.Category.log(Category.java:856)

at org.slf4j.impl.Log4jLoggerAdapter.error(Log4jLoggerAdapter.java:576)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:95)

at org.apache.flume.sink.hdfs.BucketWriter.shouldRotate(BucketWriter.java:623)

at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:558)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:426)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147) at java.lang.Thread.run(Thread.java:745)

最后进程失败的原因很清楚,是由于sink端无法将数据放到hdfs,造成channel拥堵,配置了channel内存的最大容量,超出了所以报了内存溢出的异常,然后整个flume进程都失败了

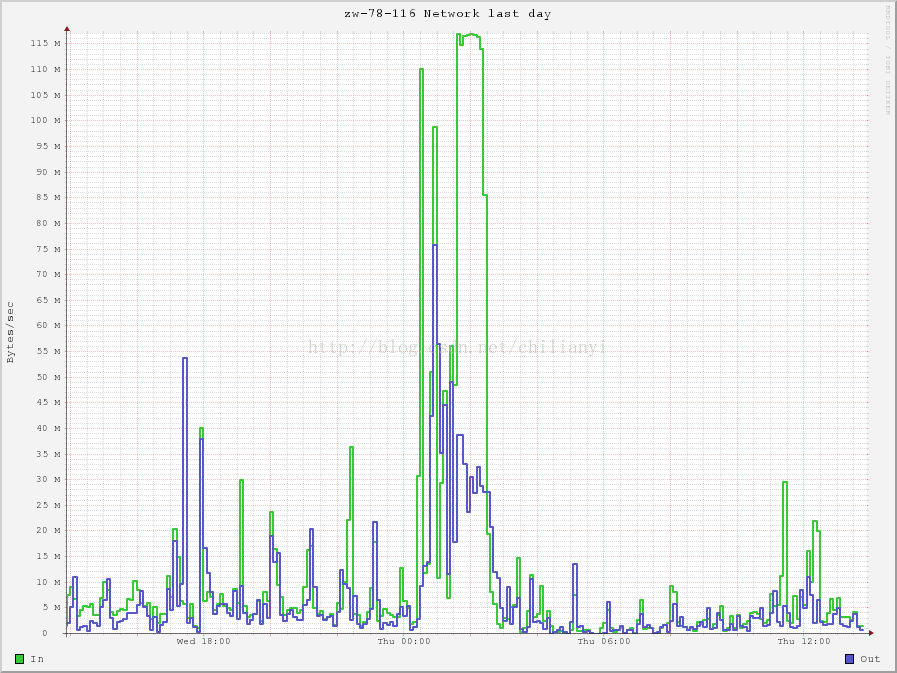

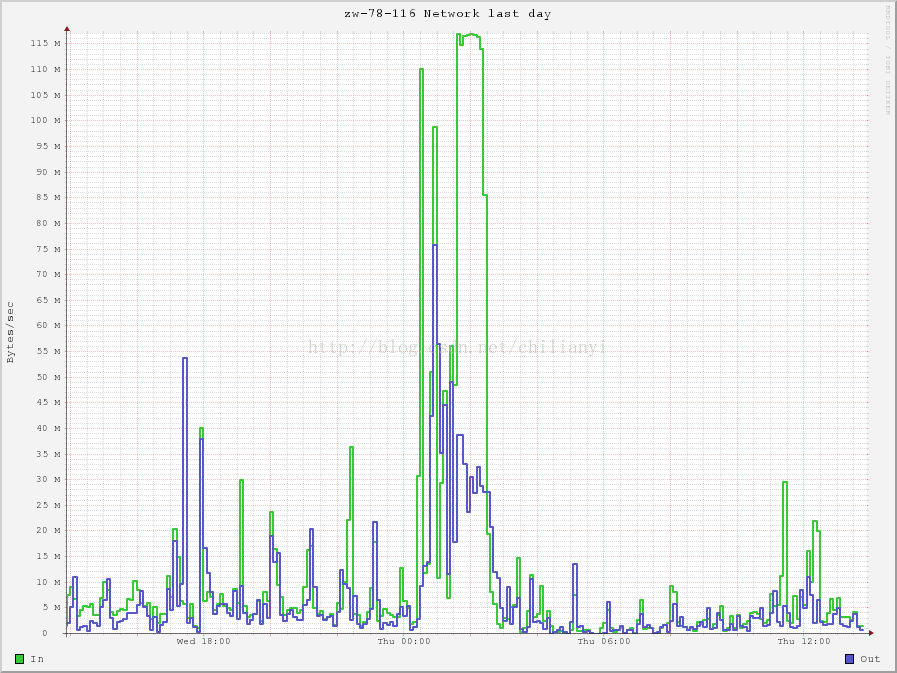

分析导致原因产生的因素:报错是socket连接超时,默认的dfs.client.socket-timeout是60s,由于那段时间所连接的机器10.10.78.116网络带宽ganglia监控图如下,可以看出1点半到2点半之间带宽是满的,所以才会造成写得socket连接超时。

![]()

分析集群原因: 1. 使用的apache2.5.0版本hadoop,默认心跳间隔是1s,导致一台机器可能分到的同一任务的reduce过多,而reduce的suffle过程会有大量的写操作。 2. 客户端没有配置map输出结果压缩。

修改内容: nodemanager 配置: <property>

<name>yarn.resourcemanager.nodemanagers.heartbeat-interval-ms</name> <value>3000</value> </property>

客户端配置 <property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>com.hadoop.compression.lzo.LzoCodec</value> </property> <property>

<name>dfs.client.socket-timeout</name>

<value>60000</value> </property> 阅读更多

2015-01-29 01:55:58,424 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:601)] Caught IOException while closing file (/user/etl/flume/live_pt/20150129/01//live_pt_bx_14_96.1422466474360.tmp). Exception follows.

java.net.SocketTimeoutException: 70000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.16.14.96:57231 remote=/10.10.78.116:50011]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:1998)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.transfer(DFSOutputStream.java:1073)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:1040)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1184)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:933)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:487)

2015-01-29 01:55:59,129 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:463)] HDFS IO error

java.net.SocketTimeoutException: 70000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.16.14.96:57231 remote=/10.10.78.116:50011]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:1998)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.transfer(DFSOutputStream.java:1073)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:1040)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1184)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:933)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:487)

2015-01-29 01:56:05,153 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:95)] Unexpected error while checking replication factor

java.lang.reflect.InvocationTargetException

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.getNumCurrentReplicas(AbstractHDFSWriter.java:161)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:81)

at org.apache.flume.sink.hdfs.BucketWriter.shouldRotate(BucketWriter.java:623)

at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:558)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:426)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.net.SocketTimeoutException: 70000 millis timeout while waiting for channel to be ready for read. ch : java.nio.channels.SocketChannel[connected local=/10.16.14.96:57231 remote=/10.10.78.116:50011]

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:164)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:1998)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.transfer(DFSOutputStream.java:1073)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.addDatanode2ExistingPipeline(DFSOutputStream.java:1040)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.setupPipelineForAppendOrRecovery(DFSOutputStream.java:1184)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.processDatanodeError(DFSOutputStream.java:933) at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:487)

报错持续了半个小时,然后抛出异常,进程退出

2015-01-29 01:58:18,025 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:467)] process failed

java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOfRange(Arrays.java:2694)

at java.lang.String.<init>(String.java:203)

at java.lang.StringBuffer.toString(StringBuffer.java:561)

at java.io.StringWriter.toString(StringWriter.java:210)

at org.apache.log4j.DefaultThrowableRenderer.render(DefaultThrowableRenderer.java:64)

at org.apache.log4j.spi.ThrowableInformation.getThrowableStrRep(ThrowableInformation.java:87)

at org.apache.log4j.spi.LoggingEvent.getThrowableStrRep(LoggingEvent.java:413)

at org.apache.log4j.WriterAppender.subAppend(WriterAppender.java:313)

at org.apache.log4j.WriterAppender.append(WriterAppender.java:162)

at org.apache.log4j.AppenderSkeleton.doAppend(AppenderSkeleton.java:251)

at org.apache.log4j.helpers.AppenderAttachableImpl.appendLoopOnAppenders(AppenderAttachableImpl.java:66)

at org.apache.log4j.Category.callAppenders(Category.java:206)

at org.apache.log4j.Category.forcedLog(Category.java:391)

at org.apache.log4j.Category.log(Category.java:856)

at org.slf4j.impl.Log4jLoggerAdapter.error(Log4jLoggerAdapter.java:576)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:95)

at org.apache.flume.sink.hdfs.BucketWriter.shouldRotate(BucketWriter.java:623)

at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:558)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:426)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147)

at java.lang.Thread.run(Thread.java:745)

Exception in thread "SinkRunner-PollingRunner-DefaultSinkProcessor" java.lang.OutOfMemoryError: Java heap space

at java.util.Arrays.copyOfRange(Arrays.java:2694)

at java.lang.String.<init>(String.java:203)

at java.lang.StringBuffer.toString(StringBuffer.java:561)

at java.io.StringWriter.toString(StringWriter.java:210)

at org.apache.log4j.DefaultThrowableRenderer.render(DefaultThrowableRenderer.java:64)

at org.apache.log4j.spi.ThrowableInformation.getThrowableStrRep(ThrowableInformation.java:87)

at org.apache.log4j.spi.LoggingEvent.getThrowableStrRep(LoggingEvent.java:413)

at org.apache.log4j.WriterAppender.subAppend(WriterAppender.java:313)

at org.apache.log4j.WriterAppender.append(WriterAppender.java:162)

at org.apache.log4j.AppenderSkeleton.doAppend(AppenderSkeleton.java:251)

at org.apache.log4j.helpers.AppenderAttachableImpl.appendLoopOnAppenders(AppenderAttachableImpl.java:66)

at org.apache.log4j.Category.callAppenders(Category.java:206)

at org.apache.log4j.Category.forcedLog(Category.java:391)

at org.apache.log4j.Category.log(Category.java:856)

at org.slf4j.impl.Log4jLoggerAdapter.error(Log4jLoggerAdapter.java:576)

at org.apache.flume.sink.hdfs.AbstractHDFSWriter.isUnderReplicated(AbstractHDFSWriter.java:95)

at org.apache.flume.sink.hdfs.BucketWriter.shouldRotate(BucketWriter.java:623)

at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:558)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:426)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:68)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:147) at java.lang.Thread.run(Thread.java:745)

最后进程失败的原因很清楚,是由于sink端无法将数据放到hdfs,造成channel拥堵,配置了channel内存的最大容量,超出了所以报了内存溢出的异常,然后整个flume进程都失败了

分析导致原因产生的因素:报错是socket连接超时,默认的dfs.client.socket-timeout是60s,由于那段时间所连接的机器10.10.78.116网络带宽ganglia监控图如下,可以看出1点半到2点半之间带宽是满的,所以才会造成写得socket连接超时。

分析集群原因: 1. 使用的apache2.5.0版本hadoop,默认心跳间隔是1s,导致一台机器可能分到的同一任务的reduce过多,而reduce的suffle过程会有大量的写操作。 2. 客户端没有配置map输出结果压缩。

修改内容: nodemanager 配置: <property>

<name>yarn.resourcemanager.nodemanagers.heartbeat-interval-ms</name> <value>3000</value> </property>

客户端配置 <property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>com.hadoop.compression.lzo.LzoCodec</value> </property> <property>

<name>dfs.client.socket-timeout</name>

<value>60000</value> </property> 阅读更多

声明:该文观点仅代表作者本人,入门客AI创业平台信息发布平台仅提供信息存储空间服务,如有疑问请联系rumenke@qq.com。

- 上一篇:没有了

- 下一篇:没有了